You might be wondering why I have waited so long to write about DeepSeek. Speed has never been my aim with this publication; clear thinking and strategic planning are. While dust is still in the air after the launch of DeepSeek, a clearer picture is emerging, clear enough for me to speculate on its implications. My thoughts on DeepSeek and its implications for AI companies and equity markets will be presented in three parts: 1) why DeepSeek signals commoditization, 2) Jevons paradox and why robotics is crucial for understanding the next phase of AI, and 3) implications for equity markets and AI-related stocks.

DeepSeek is a surprising event

A good technical run-through of DeepSeek is the one on Stratechery by Ben Thompson. DeepSeek comes with a number of innovations that also indicate that the researchers were facing constraints on the availability of chips. Note this quote from Ben Thompson:

Here’s the thing: a huge number of the innovations I explained above are about overcoming the lack of memory bandwidth implied in using H800s instead of H100s. Moreover, if you actually did the math on the previous question, you would realize that DeepSeek actually had an excess of computing; that’s because DeepSeek actually programmed 20 of the 132 processing units on each H800 specifically to manage cross-chip communications. This is actually impossible to do in CUDA. DeepSeek engineers had to drop down to PTX, a low-level instruction set for Nvidia GPUs that is basically like assembly language. This is an insane level of optimization that only makes sense if you are using H800s.

The training cost of around USD 6 million has been debated as an unrealistic number, most recently by Demis Hassabis, CEO of DeepMind (Google’s AI unit). Regardless of the true figure, what cannot be questioned is that DeepSeek costs a lot less to train because of its various innovations, and its inference (the output you get after a prompt) costs significantly less than that of competing models. Demis Hassabis does not think it shows “actual new scientific advance”, but just impressive work. DeepSeek may not be a major game changer scientifically, but technically (in its implementation) and economically it is one, because it will change the technological narrative of AI. AI is no longer a technology that the US technology sector will control with significant barriers to entry.

US technologists have been quick to point out the obvious drawbacks of DeepSeek when you ask it about sensitive political topics in China. This is obviously a problem for certain use cases, but the vast majority of AI use cases will not involve political questions at all. Also, the DeepSeek innovations can be copied and the model can be retrained to overcome the political censorship. In any case, I doubt many users find the political aspect troubling given what AI models are intended to solve for ordinary users.

I also find it striking that US technologists are complaining that DeepSeek might have infringed on their data and models through distillation, when OpenAI and other AI companies have been accused of scraping all the Internet’s data without permission or payment to content creators. Distillation is likely also fueling the divorce between Microsoft and OpenAI. Why would Microsoft fund endless data centers to train expensive models if this is not where the economic moat is, and when Microsoft just wants to provide inference for its billions of users? Google’s former CEO, Eric Schmidt, was out saying the other day that the entire AI industry must focus on open source to compete with China.

Another impressive technical detail about DeepSeek is that the full version is less than 1TB, so you are able to hold a compressed version of the “entire” Internet on a cheap memory chip that costs less than USD 200. The architecture of DeepSeek also allows AI models to run reasonably well locally on Apple M1 chips or even a Raspberry Pi 5. The point is not the level of sophistication right now, but that we are following a technology vector that will lead to bigger and satisfactory AI models over time that can run locally with no need for super advanced GPUs. It will be a bit like cameras: beyond a certain level, no consumer cares about an insane number of megapixels because the marginal benefit is too small to notice or care about.

Demand for AI post DeepSeek and why robotics matter

With DeepSeek, a couple of things will likely happen in the AI industry. First, it is likely to reduce the incremental CapEx needed for additional revenue by the hyperscalers, something we touched on in the post AI is transforming software companies. For now, the major hyperscalers like Microsoft, Meta, Amazon, and Google have all indicated that they maintain their CapEx plans for 2025, which means that they are reinforcing the narrative from before DeepSeek. This is dangerous for management because investors are beginning to question the profitability of these massive investments. It is also dangerous for these technology companies because the current CapEx intensity will lead to lower operating margins in the future, and lower ROIC. This logic is also one of the reasons why the US unemployment rate among software developers is deteriorating. You must cut other operating expenses when your fixed-capital investments go up.

After the shock of DeepSeek, Microsoft’s CEO Satya Nadella was quick to state that DeepSeek will trigger the Jevons paradox. Simply stated, this is the historical observation that as the use of a resource becomes more efficient, overall demand for it increases by even more, offsetting the efficiency gains. This has happened many times in history, so I think it is a reasonable expectation to have around AI technology. Now, Jevons paradox does not necessarily lead to great business models and significant returns for shareholders. That was clearly the case during the expansion of railroads, and the same was true of the hardware side of the PC industry in the 1990s.

There is another aspect of Jevons paradox and the growth of AI. For decades, technologists in Silicon Valley have been used to an almost infinite supply-side dynamic in which all demand has easily been absorbed by supply, because software and digital delivery have low marginal resource drag and costs. With the emergence of large language models, the software industry will expand in such a way that the physical world will suddenly become a constraint through energy and chips. The most apparent physical constraint that could cause a roadblock for AI growth is electricity.

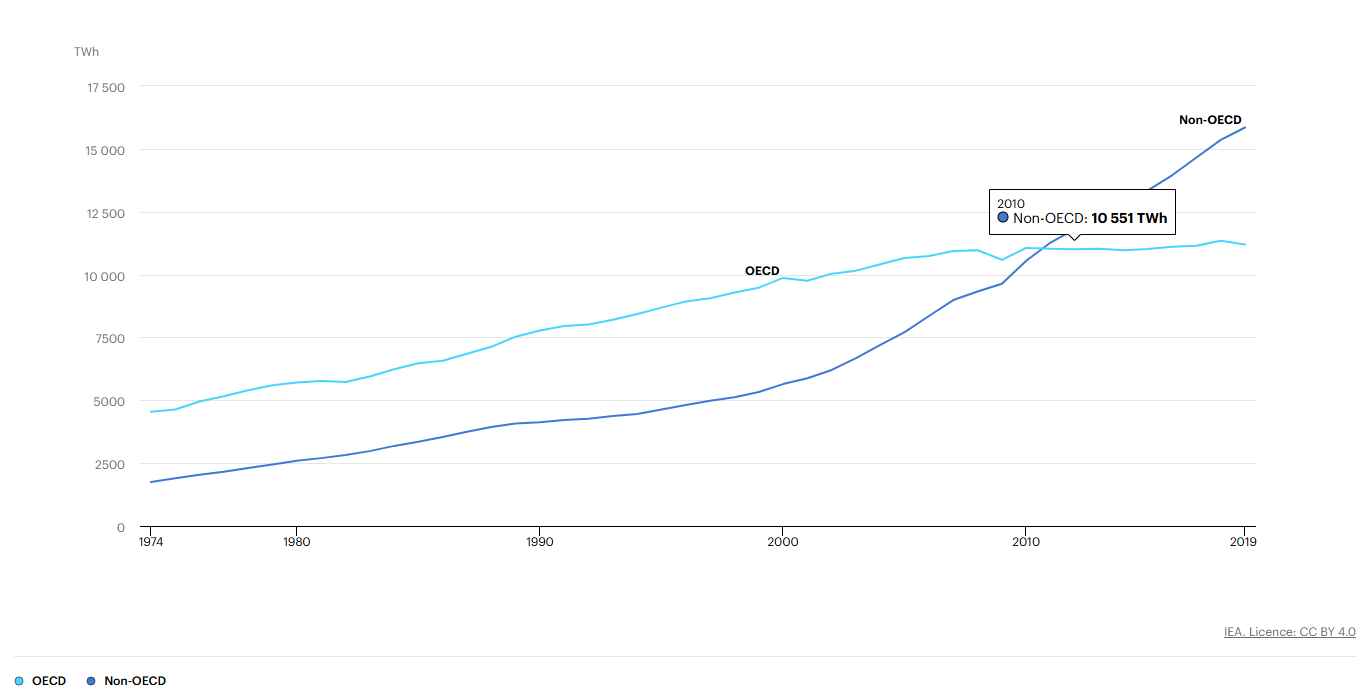

Total gross electricity production in the world grew at an annualized rate of 3.3% from 1974 to 2019, while AI data centers are expected to more than double their consumption to 500 TWh in 2027. So there will be a real mismatch between demand and supply at some point. AI data center electricity consumption will still only be around 2% of total consumption by 2027, but the growth rate, combined with bottlenecks on the electricity grid due to transformers and local electricity production, could mean supply constraints are coming fast for AI workloads. Electricity is as important as food and water for the modern world, so no society will allow AI to jeopardize electricity for local households and industry.
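To make the mismatch concrete, here is a rough back-of-the-envelope sketch of the compounding arithmetic behind the figures above. The 3.3% growth rate, the 500 TWh figure, and the roughly 2% share come from the text; the three-year doubling window is purely an assumption for illustration, since the base year for the doubling is not stated.

```python
import math

# Figures taken from the paragraph above.
WORLD_GROWTH = 0.033        # annualized growth in gross electricity production
YEARS = 2019 - 1974         # length of the observation window (45 years)
AI_DC_TWH_2027 = 500.0      # expected AI data center consumption in 2027
AI_SHARE_2027 = 0.02        # "around 2%" of total consumption

# ASSUMPTION (not in the text): the doubling happens over three years.
ASSUMED_DOUBLING_YEARS = 3


def compound_multiple(rate: float, years: float) -> float:
    """Total growth multiple after compounding `rate` for `years`."""
    return (1.0 + rate) ** years


def doubling_time(rate: float) -> float:
    """Years needed to double at a constant annual growth rate."""
    return math.log(2.0) / math.log(1.0 + rate)


def implied_rate(multiple: float, years: float) -> float:
    """Constant annual growth rate that yields `multiple` over `years`."""
    return multiple ** (1.0 / years) - 1.0


world_multiple = compound_multiple(WORLD_GROWTH, YEARS)
world_doubling_years = doubling_time(WORLD_GROWTH)
ai_growth = implied_rate(2.0, ASSUMED_DOUBLING_YEARS)
implied_world_twh = AI_DC_TWH_2027 / AI_SHARE_2027

print(f"World production multiple over {YEARS} years: {world_multiple:.2f}x")
print(f"World doubling time at 3.3%: {world_doubling_years:.1f} years")
print(f"AI data center growth if doubling in {ASSUMED_DOUBLING_YEARS} years: {ai_growth:.1%}")
print(f"Total consumption implied by a 2% share: {implied_world_twh:,.0f} TWh")
```

Under these assumptions, total supply takes a couple of decades to double, while AI data center demand doubles in a few years. Changing `ASSUMED_DOUBLING_YEARS` shows how sensitive the gap is to that one input.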

The apparent road ahead to commoditization of foundational models means that the likely competitive advantage in AI may not lie where we thought it was going to be. I don’t think it is a coincidence that Jensen Huang, CEO and co-founder of Nvidia, is talking more and more about robotics in his recent talks. It is also increasingly the obsession of Elon Musk, and it was one of the two main original goals of OpenAI until Sam Altman figured out that large language models would be easier to commercialize than robotics.

I’m betting that it will not be in the digital world where AI will make the biggest difference. It will most certainly be in the physical world. The AI company that cracks the code on robotics hardware integrated with AI will get to economies of scale (in robotics production) first, thereby creating the much-needed moat to generate excess profits in the future. I also subscribe to the idea that AI cannot go AGI without physical awareness. Another reason why robotics with AI is necessary is our current demographic trajectory. We will simply need more labor in the future to expand our physical world.

Nvidia is ground zero of the DeepSeek ‘bomb’

All of the above has consequences for the equity market and AI-related stocks. DeepSeek has potentially validated the early panic inside Google when insiders said “We Have No Moat, And Neither Does OpenAI”. The equity valuation of any company is about the future, which essentially means discounting a whole distribution of different future scenarios. The scenario that AI does not have an imminent economic moat and that open-source AI models win has a higher weight today than before DeepSeek. As a result, the valuations of AI-related companies thought to have a strong moat must reflect this uncertain future. The equity market has indeed reflected this reality.
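The scenario-weighting logic above can be sketched in a few lines. All probabilities and per-share values below are hypothetical numbers chosen only to show the mechanics; they are not estimates for any actual company.

```python
def expected_value(scenarios: dict[str, tuple[float, float]]) -> float:
    """Probability-weighted value across scenarios.

    `scenarios` maps a scenario name to (probability, value per share).
    """
    total_probability = sum(p for p, _ in scenarios.values())
    if abs(total_probability - 1.0) > 1e-9:
        raise ValueError("scenario probabilities must sum to 1")
    return sum(p * value for p, value in scenarios.values())


# HYPOTHETICAL inputs: the value in each scenario is unchanged,
# only the weight on the "no moat" scenario rises after DeepSeek.
before_deepseek = {
    "durable moat": (0.6, 150.0),
    "open-source commoditization": (0.4, 60.0),
}
after_deepseek = {
    "durable moat": (0.4, 150.0),
    "open-source commoditization": (0.6, 60.0),
}

value_before = expected_value(before_deepseek)
value_after = expected_value(after_deepseek)
change = value_after / value_before - 1.0

print(f"Value before: {value_before:.1f}")
print(f"Value after:  {value_after:.1f}")
print(f"Change:       {change:.1%}")
```

The point of the sketch is that a stock can fall meaningfully without any single scenario becoming worse; shifting weight toward the lower-valued scenario is enough.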

As the chart above shows, the two biggest casualties after the DeepSeek moment have been Nvidia and Tesla (Musk’s impairment of the Tesla brand is also playing its part). If open source AI wins and more efficiency gains can be innovated in AI models the narrative of AI only being possible with ever more powerful Nvidia GPUs collapses. Given the new reality, or at least the higher probability of a different future, the equity market’s high expectations for Nvidia make the chipmaker the most dangerous position to hold in any portfolio.

Tesla’s comeback over the past year has been a mix of Elon Musk reinforcing the narrative that Tesla will win in self-driving car technology and robotics, in addition to expected tailwinds from being close to the Trump administration. My view is that both narratives are wrong. Being close to Trump will be an own goal for Elon Musk and Tesla due to the brand damage and fewer EV credits in the future. DeepSeek opens up a path to a future where self-driving capabilities become a commodity like digital AI models. If that scenario plays out, then the car industry will remain what it has been for half a century: a commoditized, capital-intensive sector with marketing shuffling market share around in a tit-for-tat game.

The big winner judged by the market is Meta. DeepSeek has vindicated its open source AI approach with its own Llama model. This model has just been used together with a custom-built AI chip from Cerebras to deliver inference of 1,200 tokens per second in partnership with Perplexity to fight against Google Search. This news reinforces my view that more custom-made AI chips are coming which is bad for Nvidia and that LLMs may significantly disrupt Google Search sooner than anticipated. I no longer use Google Search.

In theory, you would think open-source AI winning would be great for Microsoft and Google because they could use less CapEx and thus increase free cash flows. However, the logic is more that the market had priced in economic moats that are not likely to be there, so equity valuations must go lower.

ASML is slightly higher since the DeepSeek moment, reflecting that overall chip demand will continue to grow and that its stronghold in lithography technology will persist. Salesforce was the biggest perceived winner together with Meta in the days after DeepSeek, but the market has since revised that optimism. My view is that using open-source AI models like Llama with cheaper custom-made AI chips will allow Salesforce to roll out AI features at lower costs compared to expectations from before DeepSeek, which should be positively reflected in its equity valuation over time.

Electric utility stocks such as Constellation Energy are also among the big casualties, reflecting that the market has lowered its expectations for AI data center rollouts.

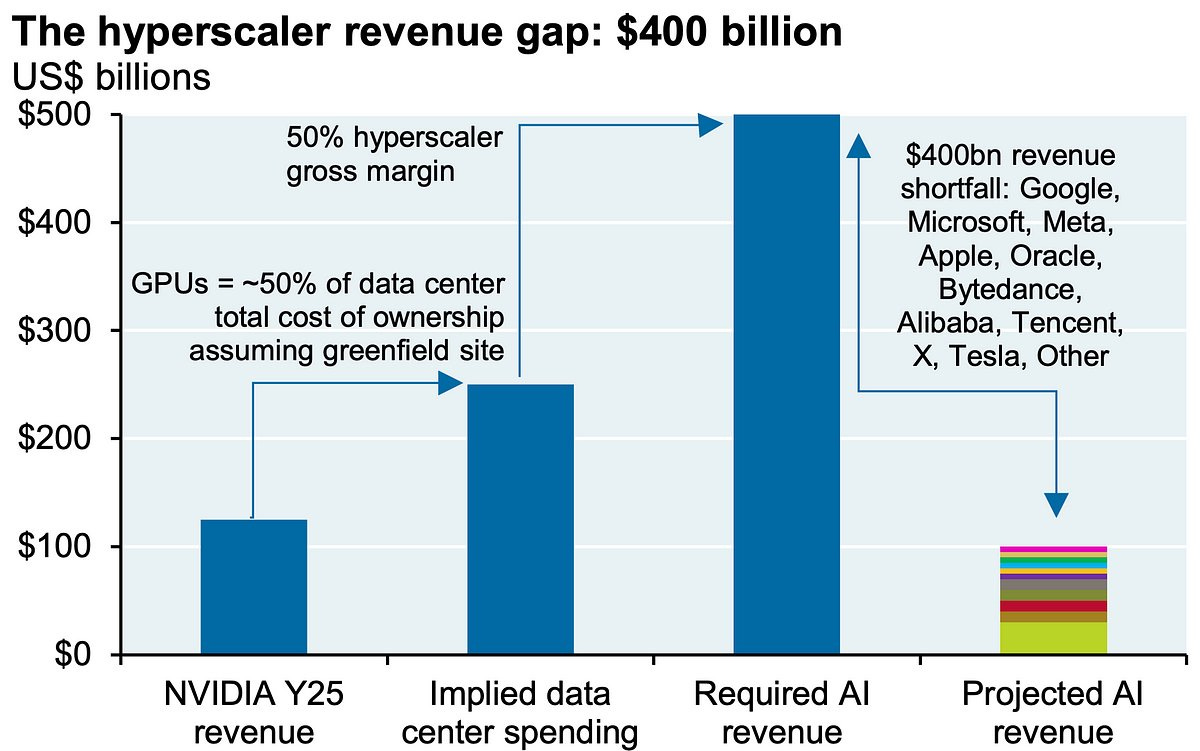

As I have said many times in the past couple of years, this period represents the AI infrastructure buildout phase, and the long-term winners are likely not the ones we expect to win. This view is based on past technology cycles. The winners always emerge later in the technology cycle. The biggest risk to equities related to AI is still the path to sustainable commercialization. We have what Sequoia Capital defines as the AI revenue gap (see chart below), and OpenAI has openly admitted that its current business model is not economically sustainable.

This is the end of my thoughts on AI, DeepSeek and the future. Whether these thoughts will age well is a good question. As said in the beginning, the dust is still in the air and narratives can still change many times this year. My conclusion is that DeepSeek will turn out to have been a pivotal moment in the early history of AI commercialization and completely alter the prevailing narrative on AI.

The next couple of updates

My goal of writing once a week might be difficult to uphold in the coming weeks, but I will do my best. I have decided to launch my own equity fund here in Denmark, and I have now entered the more time-consuming part of the process, where numerous documents must be sent to the local regulator.

I have an extensive roadmap for all the things I want to share with you all, so in the interest of time, I will need to set clear priorities. Unless a curve ball such as DeepSeek is thrown at the market, I am planning to write the following posts:

Why I am launching a new active equity fund and what my vision is.

A review of Poor Charlie’s Almanack, which is a collection of Charlie Munger’s thoughts on life and investing.

Palantir’s equity valuation as a primer for understanding future returns within the free cash flow framework described in previous posts.

Outcome variables vs. principal drivers of outcomes: A soft primer for a later post on causality vs. correlation.

After reading this, Peter, I have more questions: why didn’t the hyperscalers change their CapEx investments, and why is Meta a winner and Alphabet not, if they can use AI in a similar way? And does the same apply between Microsoft and Salesforce?

Very insightful! Good luck with everything Peter!